This is a viewpoint editorial by Aleksandar Svetski, author of “The UnCommunist Manifesto” and creator of the Bitcoin-focused language model Spirit of Satoshi.

Language designs are all the rage, and many individuals are simply taking structure designs (usually ChatGPT or something comparable) and after that linking them to a vector database so that when individuals ask their “model” a concern, it reacts to the response with context from this vector database.

What is a vector database? I’ll describe that in more information in a future essay, however a basic method to comprehend it is as a collection of details saved as portions of information, that a language model can query and utilize to produce much better actions. Imagine “The Bitcoin Standard,” divided into paragraphs, and saved in this vector database. You ask this brand-new “model” a concern about the history of cash. The underlying model will in fact query the database, pick the most appropriate piece of context (some paragraph from “The Bitcoin Standard”) and after that feed it into the timely of the underlying model (in most cases, ChatGPT). The model needs to then react with a more appropriate response. This is cool, and works okay in many cases, however doesn’t resolve the underlying problems of mainstream sound and predisposition that the underlying designs undergo throughout their training.

This is what we’re attempting to do at Spirit of Satoshi. We have actually constructed a model like what’s explained above about 6 months back, which you can go try here. You’ll observe it’s okay with some responses however it cannot hold a discussion, and it carries out truly improperly when it concerns shitcoinery and things that a genuine Bitcoiner would understand.

This is why we’ve altered our method and are developing a complete language model from scratch. In this essay, I will talk a little bit about that, to provide you a concept of what it involves.

A More ‘Based’ Bitcoin Language Model

The objective to build a more “based” language model continues. It’s shown to be more involved than even I had actually believed, not from a “technically complicated” viewpoint, however more from a “damn this is tedious” viewpoint.

It’s everything about information. And not the amount of information, however the quality and format of information. You’ve most likely heard geeks speak about this, and you don’t truly value it till you in fact start feeding the things to a model, and you get an outcome… which wasn’t always what you desired.

The information pipeline is where all the work is. You need to gather and curate the information, then you need to extract it. Then you need to programmatically tidy it (it’s difficult to do a first-run tidy by hand).

Then you take this programmatically-cleaned, raw information and you need to change it into numerous information formats (consider question-and-answer sets, or semantically-coherent portions and paragraphs). This you also require to do programmatically, if you’re handling loads of information — which holds true for a language model. Funny enough, other language designs are in fact great for this job! You utilize language designs to build brand-new language designs.

Then, due to the fact that there will likely be loads of scrap left therein, and unimportant trash created by whatever language model you utilized to programmatically change the information, you require to do a more extreme tidy.

This is where you require to get human aid, due to the fact that at this phase, it appears human beings are still the only animals in the world with the company required to separate and identify quality. Algorithms can sort of do this, however not so well with language right now — particularly in more nuanced, relative contexts — which is where Bitcoin directly sits.

In any case, doing this at scale is exceptionally difficult unless you have an army of individuals to assist you. That army of individuals can be mercenaries spent for by somebody, like OpenAI which has more cash than God, or they can be missionaries, which is what the Bitscoins.netmunity normally is (we’re really fortunate and grateful for this at Spirit of Satoshi). Individuals go through information products and one by one choose whether to keep, dispose of or customize the information.

Once the information goes through this procedure, you wind up with something tidy on the other end. Of course, there are more complexities included here. For example, you require to guarantee that bad stars who are attempting to mishandle your clean-up procedure are removed, or their inputs are disposed of. You can do that in a series of methods, and everybody does it a bit in a different way. You can evaluate individuals en route in, you can build some sort of internal clean-up agreement model so that limits require to be satisfied for information products to be kept or disposed of, and so on. At Spirit of Satoshi, we’re doing a mix of both, and I think we will see how reliable it remains in the coming months.

Now… as soon as you’ve got this lovely tidy information out completion of this “pipeline,” you then require to format it again in preparation for “training” a model.

This last is where the visual processing systems (GPUs) enter play, and is truly what the majority of people consider when they become aware of developing language designs. All the other things that I covered is normally overlooked.

This home-stretch phase includes training a series of designs, and having fun with the specifications, the information blends, the quantum of information, the model types, and so on. This can rapidly get costly, so you finest have some damn excellent information and you’re much better off beginning with smaller sized designs and developing your method up.

It’s all speculative, and what you go out the other end is… an outcome…

It’s extraordinary the important things we human beings summon. Anyway…

At Spirit of Satoshi, our outcome is still in the making, and we are dealing with it in a number of methods:

- We ask volunteers to assist us gather and curate the most appropriate information for the model. We’re doing that at The Nakamoto Repository. This is a repository of every book, essay, post, blog site, YouTube video and podcast about and associated to Bitcoin, and peripherals like the works of Friedrich Nietzsche, Oswald Spengler, Jordan Peterson, Hans-Hermann Hoppe, Murray Rothbard, Carl Jung, the Bible, and so on.

You can look for anything there and gain access to the URL, text file or PDF. If a volunteer can’t discover something, or feel it requires to be consisted of, they can “add” a record. If they include scrap however, it won’t be accepted. Ideally, volunteers will send the information as a .txt file together with a link.

- Community members can also in fact assist us clean up the information, and make sats. Remember that missionary phase I discussed? Well this is it. We’re presenting an entire tool kit as part of this, and individuals will have the ability to play “FUD buster” and “rank replies” and all sorts of other things. For now, it’s like a Tinder-esque keep/discard/comment experience on information user interface to tidy up what’s in the pipeline.

This is a method for individuals who have actually invested years finding out about and understanding Bitcoin to change that “work” into sats. No, they’re not going to get abundant, however they can assist contribute towards something they may consider a worthwhile task, and make something along the method.

Probability Programs, Not AI

In a couple of previous essays, I’ve argued that “artificial intelligence” is a problematic term, due to the fact that while it is synthetic, it’s not smart — and moreover, the worry pornography surrounding synthetic basic intelligence (AGI) has actually been totally unproven due to the fact that there is actually no danger of this thing ending up being spontaneously sentient and eliminating all of us. A couple of months on and I am a lot more persuaded of this.

I reflect to John Carter’s outstanding post “I’m Already Bored With Generative AI” and he was so area on.

There’s truly absolutely nothing wonderful, or smart for that matter, about any of this AI things. The more we have fun with it, the more time we invest in fact developing our own, the more we recognize there’s no life here. There’s no real thinking or thinking occurring. There is no company. These are simply “probability programs.”

The method they are identified, and the terms tossed around, whether it’s “AI” or “machine learning” or “agents,” is in fact where the majority of the worry, unpredictability and doubt lies.

These labels are simply an effort to explain a set of procedures, that are truly unlike anything that a human does. The issue with language is that we instantly start to anthropomorphize it in order to understand it. And in the procedure of doing that, it is the audience or the listener who breathes life into Frankenstein’s beast.

AI has no life aside from what you provide it with your own creativity. This is similar with any other fictional, eschatological risk.

(Insert examples around environment modification, aliens or whatever else is going on on Twitter/X.)

This is, obviously, really beneficial for globo-homo bureaucrats who wish to utilize any such tool/program/machine for their own functions. They’ve been spinning stories and stories considering that prior to they might stroll, and this is simply the most recent one to spin. And due to the fact that the majority of people are lemmings and will think whatever somebody who sounds a couple of IQ points smarter than them needs to state, they will utilize that to their benefit.

I keep in mind discussing guideline boiling down the pipeline. I observed that recently or the week previously, there are now “official guidelines” or something of the sort for generative AI — thanks to our governmental overlords. What this suggests, no one truly understands. It’s masked in the very same ridiculous language that all of their other policies are. The net outcome being, as soon as again, “We write the rules, we get to use the tools the way we want, you must use it the way we tell you, or else.”

The most absurd part is that a lot of individuals cheered about this, believing that they’re in some way more secure from the fictional beast that never ever was. In reality, they’ll most likely credit these firms with “saving us from AGI” due to the fact that it never ever emerged.

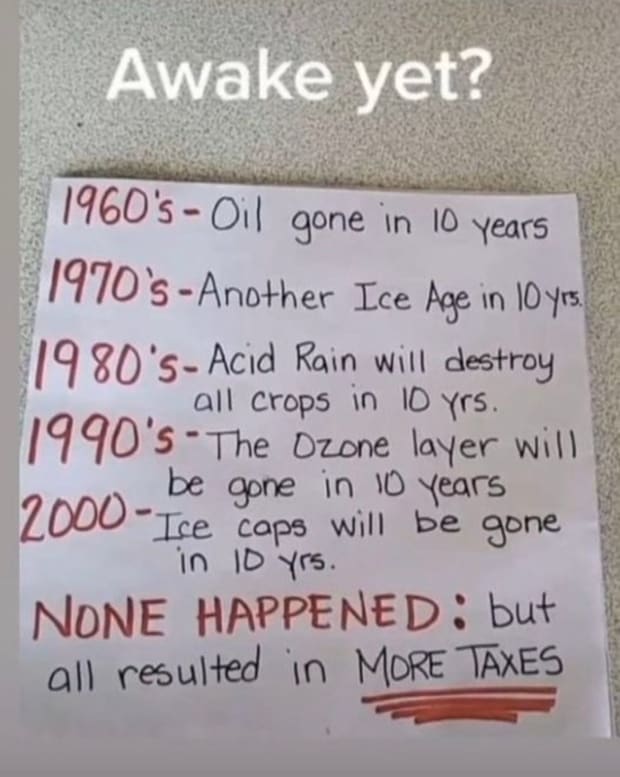

It advises me of this:

When I published the above photo on Twitter, the quantity of morons who reacted with real belief that the avoidance of these disasters was an outcome of increased governmental intervention informed me all that I required to understand about the level of cumulative intelligence on that platform.

Nevertheless, here we are. Once once again. Same story, brand-new characters.

Alas — there’s truly little we can do about that, aside from to concentrate on our own things. We’ll continue to do what we set out to do.

I’ve ended up being less fired up about “GenAI” in basic, and I get the sense that a great deal of the buzz is subsiding as individuals’s attention moves onto aliens and politics once again. I’m also less persuaded that there is something considerably transformative here — a minimum of to the degree that I believed 6 months back. Perhaps I’ll be shown incorrect. I do believe these tools have hidden, untapped capacity, however it’s simply that: hidden.

I believe we need to be more practical about what they are (rather of expert system, it’s much better to call them “probability programs”) which may in fact imply we invest less energy and time on pipeline dreams and focus more on structure beneficial applications. In that notice, I do stay curious and very carefully positive that something does emerge, and think that someplace in the nexus of Bitcoin, likelihood programs and procedures such as Nostr, something really beneficial will emerge.

I am confident that we can participate in that, and I’d enjoy for you also to participate in it if you’re interested. To that end, I will leave you all to your day, and hope this was a useful 10-minute insight into what it requires to build a language model.

This is a visitor post by Aleksander Svetski. Opinions revealed are completely their own and do not always show those of BTC Inc or Bitcoin Magazine.

Thank you for visiting our site. You can get the latest Information and Editorials on our site regarding bitcoins.